Looking at Google Drive, it does not seem to generate the root folder. Here are links (example links that work regardless; with no files in the root folder: **External links are only visible to Support Staff**, with files in the root folder: **External links are only visible to Support Staff**).

Thanks. I'm wondering if you can add it to mediafire, mega, google drive/docs and onedrive as well.

For mediafire it seems to work unless the main folder has no files in it; for example, compare this link, which works in that respect:

From my testing with mega, it seems to implement the root folder as desired regardless of whether or not there is a file in it, so I do not think any change needs to be made.

In the crawling plugin you can enable *Include Root Subfolder*. After the next update, go to Settings-Plugins and search for dropbox. I've added special support in the Dropbox plugin for this.

The root does have the correct packagename but no subfolder, because it is the root (the start of the adding process). Packagename and subfolders are different things. Each Dropbox folder has a packagename, and the subfolder just specifies the relative path of that package.

Unfortunately I don't quite have time to pin down a link for the [ error, but I'll try to get it to you later if you need it. Also, thanks for all your work on this program.
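To illustrate the packagename versus subfolder distinction described above, here is a minimal Python sketch. It is not JDownloader's code: the function name `package_and_subfolder` and the sample paths are made up for illustration, and it simply assumes the packagename is the folder's own name while the subfolder is that folder's path relative to the root that was added.

```python
from pathlib import PurePosixPath
from typing import Tuple


def package_and_subfolder(root: str, folder: str) -> Tuple[str, str]:
    """Return (packagename, subfolder) for a folder found under root.

    Hypothetical helper: the packagename is taken to be the folder's own
    name, and the subfolder is the folder's path relative to the root.
    """
    root_path = PurePosixPath(root)
    folder_path = PurePosixPath(folder)
    package_name = folder_path.name
    relative = folder_path.relative_to(root_path)
    # The root itself has a packagename but an empty relative path.
    subfolder = "" if relative == PurePosixPath(".") else str(relative)
    return package_name, subfolder


if __name__ == "__main__":
    print(package_and_subfolder("/shared", "/shared"))
    print(package_and_subfolder("/shared", "/shared/folder1/folder1a"))
```

Under these assumptions the root gets a packagename and an empty subfolder, while a nested folder gets both, which is the behaviour the reply describes for the start of the adding process.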

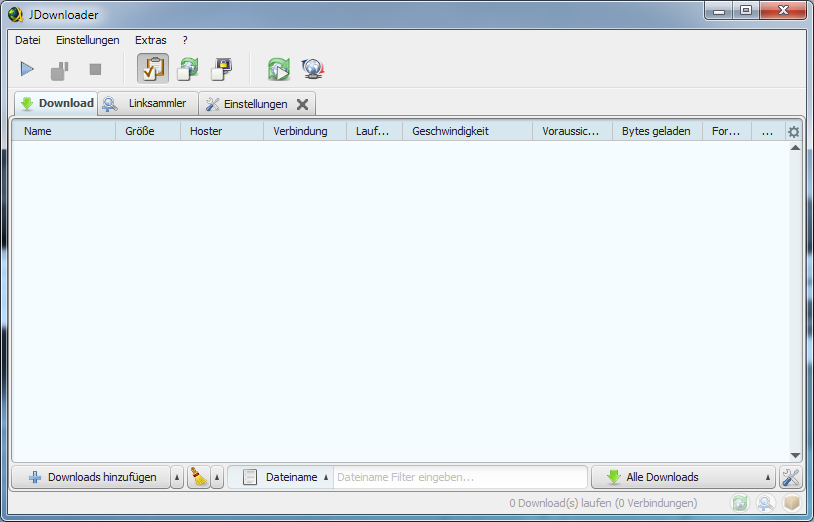

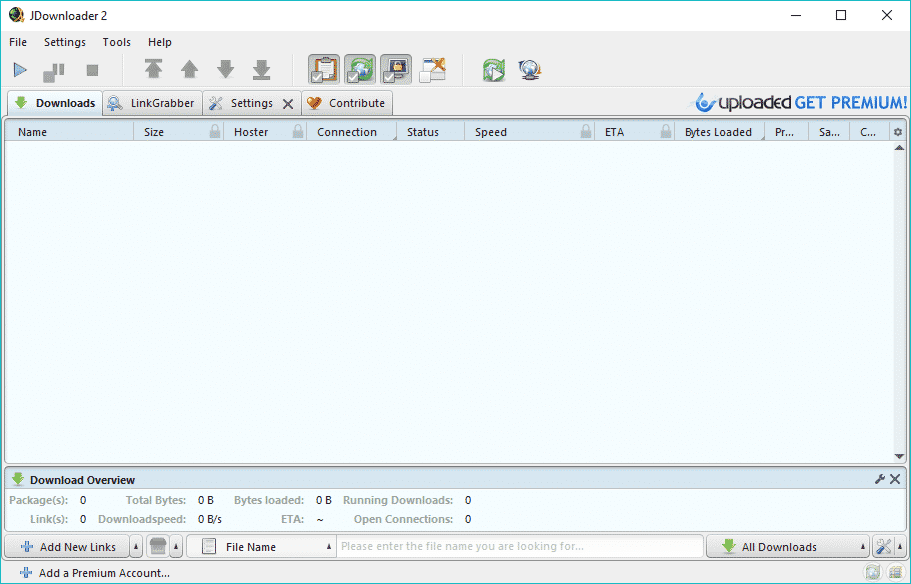

JDownloader folderwatch location download

It treats each subfolder as a separate package, rather than a subfolder.

Although not related, I also found an error with the mediafire plugin (at least that's where I've noticed this issue most): if the file has a "[" (ignore the quotation marks) in the name (off the top of my head the "[" is not closed, but I'm not sure whether that affects it), it will break, as JDownloader appends a bunch of data relating to the download to the filename, requiring the user to manually rename the file before it can download properly.

It does not output the directory recursively, but will instead take: **External links are only visible to Support Staff**

It seems that the implementation works but perhaps the plugins do not. I'm dealing with a variety of hosts (mediafire, mega, dropbox, off the top of my head), so I didn't give a link.

Hi, thanks. I'm not sure if the feature has been fully implemented, but JDownloader seems to recognize it.

I've just tested with mega folder links and it is working fine for me. The new variable from helps to append values and not replace/set them. Set downloadDirectory to /Your crawljob must use

Just doing a Google search for "setBeforePackagizerEnabled" comes up with 0 results, and the same goes for a forum search, and I don't know what this option does (it doesn't seem to do anything).

It's a little annoying trying to learn this program by googling and searching through threads.

I tried appending to downloadFolder=C:\DOWNLOADFOLDER\ but JDownloader didn't parse it correctly, thinking it wanted a subfolder called, which is of course an invalid file name. Also, writing documentation might be helpful.

With C:\DOWNLOADFOLDER\ being specified in the crawljob file. So basically if the download link leads to a folder with:

C:\DOWNLOADFOLDER\folder1\folder1a\file.ext

If I add overwritePackagizerEnabled=FALSE, then it downloads to the default download folder listed in general settings, even though packagizer has no rules for download directories (apart from "Create subfolders on package name" and "Adopt folder structure", the latter of which I want). It works fine, but it doesn't preserve the online folder structure.
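To make the crawljob settings and the desired folder layout discussed above concrete, here is a small Python sketch. It is an illustration under assumptions, not an official reference: the `downloadFolder` and `overwritePackagizerEnabled` keys come from the posts above, while the `text` key for the link, the `key=value` line layout, the helper names and the example URL are assumptions on my part.

```python
from pathlib import PureWindowsPath


def build_crawljob(link: str, download_folder: str, overwrite_packagizer: bool) -> str:
    """Render a crawljob as key=value lines (layout assumed, not verified)."""
    entries = {
        "text": link,  # assumption: key that holds the link to crawl
        "downloadFolder": download_folder,
        "overwritePackagizerEnabled": "TRUE" if overwrite_packagizer else "FALSE",
    }
    return "\n".join(f"{key}={value}" for key, value in entries.items()) + "\n"


def expected_local_path(download_folder: str, online_relative_path: str) -> str:
    """Map an online relative path onto the download folder, keeping subfolders."""
    return str(PureWindowsPath(download_folder) / online_relative_path)


if __name__ == "__main__":
    # Hypothetical link; replace with a real folder link.
    job = build_crawljob("https://example.com/folder", "C:\\DOWNLOADFOLDER\\", False)
    print(job)
    print(expected_local_path("C:\\DOWNLOADFOLDER\\", "folder1/folder1a/file.ext"))
```

The second helper only spells out the outcome the poster is asking for, where the online folder structure is preserved below the folder named in the crawljob; whether JDownloader produces that layout depends on the plugin and packagizer behaviour described in the thread.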
